Best Postgres Tool for Mac

Version 11.4:

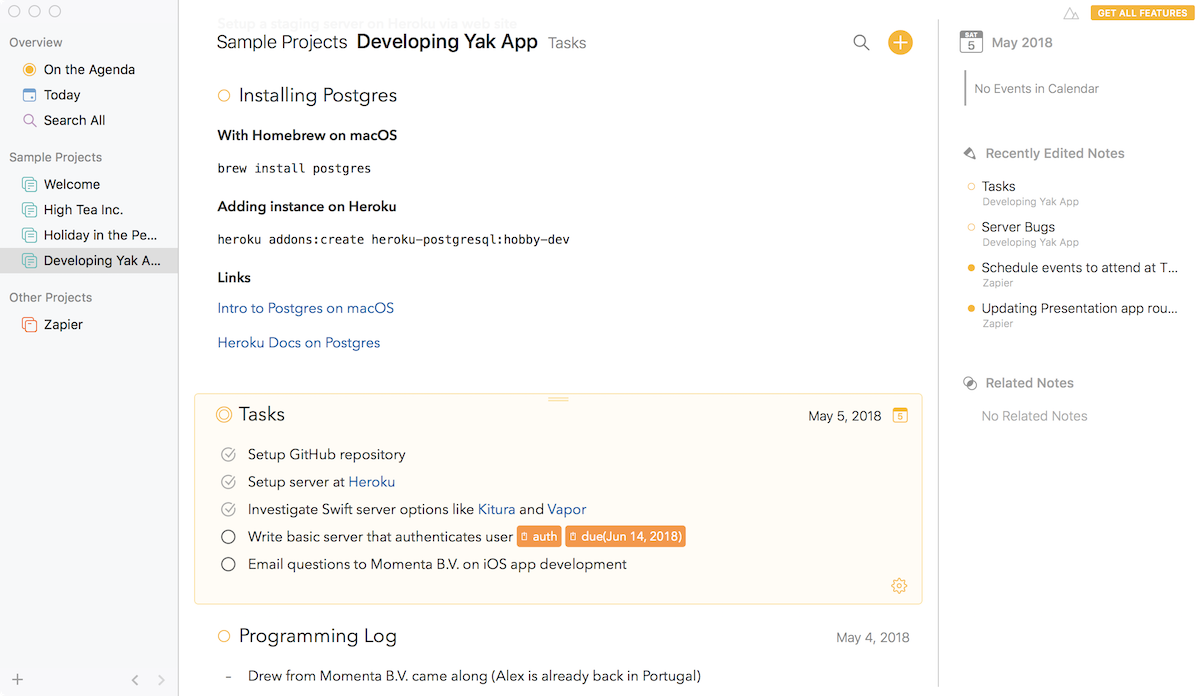

The PostgreSQL database backup tool lets users choose which objects to back up (tables, views, indexes, functions, and triggers), the character encoding of the backup file, the SQL statement separator used when generating the backup file, and whether to fully qualify object names in the generated SQL and DDL statements.

Related tools:

- SequelPro 1.1.2: The Best MySQL Tool for Mac OS X Mojave
- SQLPro for Postgres 1.0: A Simple PostgreSQL Tool for Mac OS X

PostgreSQL is an absurdly powerful database, but there's no reason why using it should require an advanced degree in relational theory. Postico provides an easy-to-use interface, making Postgres more accessible for newcomers and specialists alike.

PostgreSQL, often simply Postgres, is an object-relational database management system (ORDBMS) with an emphasis on extensibility and standards compliance. It can handle workloads ranging from small single-machine applications to large Internet-facing applications (or data warehousing) with many concurrent users. On macOS Server, PostgreSQL is the default database.

The client runs on Mac, Windows and Linux and supports a variety of database servers, including PostgreSQL.
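Some of the backup options described above (object selection, character encoding) have rough command-line counterparts in `pg_dump`. The sketch below assembles a `pg_dump` argument list using the `--table`, `--encoding`, `--schema-only`, and `--file` options. The helper name `build_pg_dump_command` and the database and table names are hypothetical. The statement-separator and name-qualification settings mentioned above belong to the GUI tool and are not modeled here.

```python
from typing import List, Optional, Sequence


def build_pg_dump_command(
    dbname: str,
    tables: Sequence[str] = (),
    encoding: Optional[str] = None,
    outfile: Optional[str] = None,
    schema_only: bool = False,
) -> List[str]:
    """Assemble a pg_dump argument list (hypothetical helper, not part of any tool)."""
    cmd = ["pg_dump"]
    for table in tables:
        # One --table option per selected table (or view).
        cmd.append(f"--table={table}")
    if encoding:
        # Character encoding of the generated dump.
        cmd.append(f"--encoding={encoding}")
    if schema_only:
        # Dump object definitions only, no data.
        cmd.append("--schema-only")
    if outfile:
        cmd.append(f"--file={outfile}")
    cmd.append(dbname)
    return cmd


if __name__ == "__main__":
    # "shop" and "public.orders" are made-up names for illustration.
    print(build_pg_dump_command("shop", ["public.orders"], "UTF8", "shop.sql"))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` on a machine where `pg_dump` is installed.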

PostgreSQL for Mac OS: changes in version 11.4

- Fix buffer-overflow hazards in SCRAM verifier parsing

  Any authenticated user could cause a stack-based buffer overflow by changing their own password to a purpose-crafted value. In addition to the ability to crash the PostgreSQL server, this could suffice for executing arbitrary code as the PostgreSQL operating system account.

  A similar overflow hazard existed in libpq, which could allow a rogue server to crash a client or perhaps execute arbitrary code as the client's operating system account.

  The PostgreSQL Project thanks Alexander Lakhin for reporting this problem. (CVE-2019-10164)

- Fix assorted errors in run-time partition pruning logic

  These mistakes could lead to wrong answers in queries on partitioned tables, if the comparison value used for pruning is dynamically determined, or if multiple range-partitioned columns are involved in pruning decisions, or if stable (not immutable) comparison operators are involved.

- Fix possible crash while trying to copy trigger definitions to a new partition

- Fix failure of ALTER TABLE ... ALTER COLUMN TYPE when the table has a partial exclusion constraint

- Fix failure of COMMENT command for comments on domain constraints

- Prevent possible memory clobber when there are duplicate columns in a hash aggregate's hash key list

- Fix incorrect argument null-ness checking during partial aggregation of aggregates with zero or multiple arguments

- Fix faulty generation of merge-append plans (Tom Lane)

  This mistake could lead to 'could not find pathkey item to sort' errors.

- Fix incorrect printing of queries with duplicate join names

  This oversight caused a dump/restore failure for views containing such queries.

- Fix conversion of JSON string literals to JSON-type output columns in json_to_record() and json_populate_record()

  Such cases should produce the literal as a standalone JSON value, but the code misbehaved if the literal contained any characters requiring escaping.

- Fix misoptimization of {1,1} quantifiers in regular expressions

  Such quantifiers were treated as no-ops and optimized away; but the documentation specifies that they impose greediness, or non-greediness in the case of the non-greedy variant {1,1}?, on the subexpression they're attached to, and this did not happen. The misbehavior occurred only if the subexpression contained capturing parentheses or a back-reference.

- Avoid writing an invalid empty btree index page in the unlikely case that a failure occurs while processing INCLUDEd columns during a page split (Peter Geoghegan)

  The invalid page would not affect normal index operations, but it might cause failures in subsequent VACUUMs. If that has happened to one of your indexes, recover by reindexing the index.

- Avoid possible failures while initializing a new process's pg_stat_activity data

  Certain operations that could fail, such as converting strings extracted from an SSL certificate into the database encoding, were being performed inside a critical section. Failure there would result in database-wide lockup due to violating the access protocol for shared pg_stat_activity data.

- Fix race condition in check to see whether a pre-existing shared memory segment is still in use by a conflicting postmaster

- Fix unsafe coding in walreceiver's signal handler

  This avoids rare problems in which the walreceiver process would crash or deadlock when commanded to shut down.

- Avoid attempting to do database accesses for parameter checking in processes that are not connected to a specific database

  This error could result in failures like 'cannot read pg_class without having selected a database'.

- Avoid possible hang in libpq if using SSL and OpenSSL's pending-data buffer contains an exact multiple of 256 bytes

- Improve initdb's handling of multiple equivalent names for the system time zone

  Make initdb examine the /etc/localtime symbolic link, if that exists, to break ties between equivalent names for the system time zone. This makes initdb more likely to select the time zone name that the user would expect when multiple identical time zones exist. It will not change the behavior if /etc/localtime is not a symlink to a zone data file, nor if the time zone is determined from the TZ environment variable.

  Separately, prefer UTC over other spellings of that time zone, when neither TZ nor /etc/localtime provide a hint. This fixes an annoyance introduced by tzdata 2019a's change to make the UCT and UTC zone names equivalent: initdb was then preferring UCT, which almost nobody wants.

- Fix ordering of GRANT commands emitted by pg_dump and pg_dumpall for databases and tablespaces

  If cascading grants had been issued, restore might fail due to the GRANT commands being given in an order that didn't respect their interdependencies.

- Make pg_dump recreate table partitions using CREATE TABLE then ATTACH PARTITION, rather than including PARTITION OF in the creation command

  This avoids problems with the partition's column order possibly being changed to match the parent's. Also, a partition is now restorable from the dump (as a standalone table) even if its parent table isn't restored; the ATTACH will fail, but that can just be ignored.

- Fix misleading error reports from reindexdb

- Ensure that vacuumdb returns correct status if an error occurs while using parallel jobs

- Fix contrib/auto_explain to not cause problems in parallel queries

  Previously, a parallel worker might try to log its query even if the parent query were not being logged by auto_explain. This would work sometimes, but it's confusing, and in some cases it resulted in failures like 'could not find key N in shm TOC'.

  Also, fix an off-by-one error that resulted in not necessarily logging every query even when the sampling rate is set to 1.0.

- In contrib/postgres_fdw, account for possible data modifications by local BEFORE ROW UPDATE triggers

  If a trigger modified a column that was otherwise not changed by the UPDATE, the new value was not transmitted to the remote server.

- On Windows, avoid failure when the database encoding is set to SQL_ASCII and we attempt to log a non-ASCII string

  The code had been assuming that such strings must be in UTF-8, and would throw an error if they didn't appear to be validly encoded. Now, just transmit the untranslated bytes to the log.

- Make PL/pgSQL's header files C++-safe
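As a rough illustration of the SCRAM verifier fix listed above: the underlying issue was parsing a stored verifier without bounding the size of its decoded parts. The actual fix lives in PostgreSQL's C code; the Python sketch below only shows the general idea of checking decoded lengths before using them. It assumes the stored format `SCRAM-SHA-256$<iterations>:<salt>$<StoredKey>:<ServerKey>` with base64-encoded fields, and the names `ScramVerifier` and `parse_scram_verifier` are hypothetical.

```python
import base64
import binascii
from typing import NamedTuple

SCRAM_KEY_LEN = 32  # size of a SHA-256 digest in bytes


class ScramVerifier(NamedTuple):
    iterations: int
    salt: bytes
    stored_key: bytes
    server_key: bytes


def parse_scram_verifier(verifier: str) -> ScramVerifier:
    """Parse a verifier string, rejecting malformed or oversized fields.

    Illustrative only; this is not PostgreSQL's implementation.
    """
    try:
        scheme, iter_salt, keys = verifier.split("$")
        iterations_text, salt_b64 = iter_salt.split(":")
        stored_b64, server_b64 = keys.split(":")
        iterations = int(iterations_text)
        salt = base64.b64decode(salt_b64, validate=True)
        stored_key = base64.b64decode(stored_b64, validate=True)
        server_key = base64.b64decode(server_b64, validate=True)
    except (ValueError, binascii.Error) as exc:
        raise ValueError("malformed SCRAM verifier") from exc

    if scheme != "SCRAM-SHA-256" or iterations <= 0:
        raise ValueError("unsupported scheme or iteration count")
    # The key check: never accept decoded keys of unexpected length.
    if len(stored_key) != SCRAM_KEY_LEN or len(server_key) != SCRAM_KEY_LEN:
        raise ValueError("unexpected key length in SCRAM verifier")
    return ScramVerifier(iterations, salt, stored_key, server_key)
```

In a memory-safe language an oversized field simply produces a longer `bytes` object; in C, the same input written into a fixed-size stack buffer is what makes the length check essential.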
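The initdb time zone item above describes a tie-break among equivalent zone names. A minimal sketch of that decision order follows, assuming the candidates are already known to be equivalent and that TZ is unset. The function name `pick_zone_name`, the `zoneinfo/` path convention, and the final shortest-then-alphabetical fallback are assumptions made for illustration, not initdb's actual code.

```python
import os
from typing import Sequence


def pick_zone_name(candidates: Sequence[str], localtime_path: str = "/etc/localtime") -> str:
    """Choose one name among equivalent time zone names (illustrative sketch)."""
    # 1. If /etc/localtime is a symlink into a zoneinfo tree, prefer the
    #    name it points at, provided that name is one of the candidates.
    if os.path.islink(localtime_path):
        target = os.readlink(localtime_path)
        _, sep, name = target.partition("zoneinfo/")
        if sep and name in candidates:
            return name
    # 2. With no hint from the symlink, prefer the spelling "UTC".
    if "UTC" in candidates:
        return "UTC"
    # 3. Assumed fallback: shortest name, then alphabetical order.
    return sorted(candidates, key=lambda n: (len(n), n))[0]
```

For example, with `UCT` and `UTC` as equivalent candidates and no usable symlink, the function returns `UTC`.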
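The GRANT ordering item above comes down to a dependency problem: a role can only pass a privilege on after it has received that privilege itself. One way to picture this is a topological sort, sketched below with Python's standard `graphlib` module (Python 3.9+). The `order_grants` helper and the role names are hypothetical, and this is not how pg_dump implements the fix.

```python
from graphlib import TopologicalSorter
from typing import List, Tuple

Grant = Tuple[str, str]  # (grantor, grantee)


def order_grants(grants: List[Grant], owner: str) -> List[Grant]:
    """Return grants in an order where every grantor already holds the privilege."""
    sorter = TopologicalSorter()
    for i, (grantor, _grantee) in enumerate(grants):
        deps = []
        if grantor != owner:
            # This grant depends on every grant that gave the privilege
            # to its grantor; the owner needs no prior grant.
            deps = [j for j, (_, grantee_j) in enumerate(grants) if grantee_j == grantor]
        sorter.add(i, *deps)
    return [grants[i] for i in sorter.static_order()]


if __name__ == "__main__":
    # bob re-grants what alice (the owner) granted to him.
    print(order_grants([("bob", "carol"), ("alice", "bob")], owner="alice"))
```

Emitting the GRANT commands in the returned order means a restore never reaches a grant whose grantor has not yet been given the privilege.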
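The pg_dump partition item above replaces a single `CREATE TABLE ... PARTITION OF` command with two steps. The sketch below builds the two statements as strings to show their shape. The helper `partition_ddl` and the table, column, and bound values are made up for illustration, and the exact text pg_dump emits may differ.

```python
from typing import List, Sequence


def partition_ddl(parent: str, child: str, columns: Sequence[str], bound: str) -> List[str]:
    """Build a standalone CREATE TABLE followed by ATTACH PARTITION (illustrative)."""
    # Step 1: create the partition as an ordinary table, keeping its own
    # column order rather than inheriting the parent's.
    create = f"CREATE TABLE {child} ({', '.join(columns)});"
    # Step 2: attach it to the parent. If the parent was not restored,
    # only this statement fails and the table from step 1 remains usable.
    attach = f"ALTER TABLE {parent} ATTACH PARTITION {child} {bound};"
    return [create, attach]


if __name__ == "__main__":
    for stmt in partition_ddl(
        "measurement",
        "measurement_y2019",
        ["city_id int", "logdate date NOT NULL"],
        "FOR VALUES FROM ('2019-01-01') TO ('2020-01-01')",
    ):
        print(stmt)
```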